What is Kubernetes?

Publish Date: 01 April 2024

If you are new to Kubernetes and want an introduction, this guide is for you. It covers the following topics:

- What is Kubernetes

- Why do we need something like Kubernetes?

- Why should you spend time learning Kubernetes?

- A brief idea of how Kubernetes works

- What are some of the benefits of working with Kubernetes?

- When to use Kubernetes

- How can you get started with Kubernetes?

What is Kubernetes

Kubernetes is a container orchestration platform originally developed at Google. It was open-sourced in 2014 and reached version 1.0 in 2015. A container orchestration platform helps you manage the lifecycle of your containers: it takes care of deploying, releasing, scaling and operating them, and lets you automate much of that work.

Today it is maintained under the Cloud Native Computing Foundation (CNCF).

Why do we need something like Kubernetes?

As teams and companies started running more and more containers in production, they needed a platform to manage the lifecycle of these containers. This included things like:

- Being able to manage hundreds or thousands of containers easily.

- Being able to release these containers easily using automation.

- Being able to ensure that the services running in these containers were highly available and reliable.

- Being able to scale these containers easily based on traffic.

- Being able to load balance traffic across these containers easily.

- Being able to provide persistent storage to these containers.

- Being able to handle communication between different services easily, which became more important with the rise of microservices.

Why should you spend time learning Kubernetes?

Kubernetes is one of the most widely used technologies for running containers in production. Wherever you go to work, there is a good chance that the company runs Kubernetes in production.

If your team runs applications on Kubernetes, knowing this technology will be very useful to you.

Also, if you have been working with containers for quite some time, you will appreciate the ease and convenience that Kubernetes brings.

If you aspire to become a good DevOps engineer with a well-paying job, you should spend time learning and understanding the fundamentals of Kubernetes.

If you are a developer, you should also spend time understanding Kubernetes, since it will help you debug issues when they happen in production. Even if your team does not use Kubernetes in production, learning how Kubernetes works will still teach you good system design and architecture concepts.

A brief idea of how Kubernetes works

I will not go into the details of the Kubernetes architecture in this article. Instead, I will give a brief overview of the architecture and how it works.

High Level Architecture

Kubernetes follows a cluster architecture that includes two types of nodes:

- Master Node

- Worker Nodes

Master Node

Master nodes (now usually called control plane nodes) are the nodes where the services that manage the cluster run. A cluster generally has one master node, unless it runs in high availability mode, in which case it often has three.

Worker Nodes

Worker nodes are the nodes where the actual application containers are deployed. So if you deploy an Nginx container on Kubernetes, it will land on a worker node.

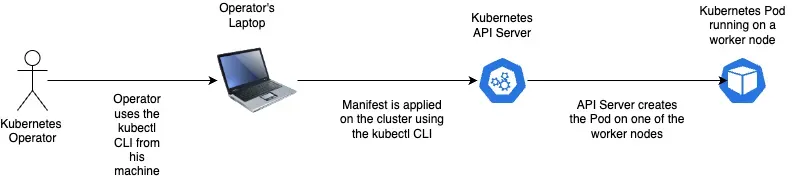

Let me walk you through how you would deploy a container on Kubernetes. Say you want to deploy an Nginx container. First, you would prepare a manifest file on your local machine. This manifest describes how Kubernetes should deploy the container:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
```

Here is what the fields in the manifest above mean:

- `apiVersion`: the version of the Kubernetes API that defines this kind of resource. Pods are part of the core API, whose version is `v1`.

- `kind`: the kind of resource being deployed. Kubernetes supports many kinds of resources, such as Pods, Deployments and Services. A Pod represents a set of running containers on your cluster.

- `name`: the name of the Pod.

- `labels`: key-value labels attached to the Pod for categorization and filtering.

- `containers`: a Pod can have more than one container running inside it, so the `containers` field takes an array of the containers that will run in the Pod. Each entry has:

  - `name`: the name of the container inside the Pod.

  - `image`: the Docker image used for the container.

  - `ports`: the ports the container exposes.
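The `labels` field is what other Kubernetes resources use to find this Pod. As a rough sketch (the name `nginx-service` is my own choice for illustration), here is a Service, one of the other resource kinds mentioned above, that selects every Pod labelled `app: nginx` and load balances traffic across them on port 80. Because it does not set a `type`, it defaults to `ClusterIP`, so it is only reachable from inside the cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service     # hypothetical name, pick your own
spec:
  selector:
    app: nginx            # matches the label on nginx-pod
  ports:
    - protocol: TCP
      port: 80            # port the Service listens on inside the cluster
      targetPort: 80      # containerPort on the selected Pods
```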

Say the manifest above is saved in a file named nginx.yaml. You can deploy it to the cluster with the following command:

```shell
kubectl apply -f nginx.yaml
```

The command above uses the kubectl CLI, the tool for interacting with a Kubernetes cluster from the shell and the most popular way to do so. It is used both in CI/CD pipelines and on DevOps engineers' local machines, and mastering it will make you much more efficient at working with Kubernetes.

kubectl talks to the Kubernetes API Server, which runs on the master node. The API Server is the bridge between the Kubernetes cluster and its administrator (which is you). Whenever you want to change something in the cluster or look at its state, you interact with the Kubernetes API Server.
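How does kubectl know where the API Server is? It reads a kubeconfig file, by default `~/.kube/config`. Below is a minimal sketch of one. The cluster, user and context names, the server address, the certificate path and the token are all placeholders I made up; tools like Minikube normally generate this file for you.

```yaml
apiVersion: v1
kind: Config
clusters:
  - name: my-cluster                          # hypothetical cluster name
    cluster:
      server: https://203.0.113.10:6443       # placeholder API Server address
      certificate-authority: /path/to/ca.crt  # placeholder CA certificate path
users:
  - name: my-user                             # hypothetical user entry
    user:
      token: REPLACE_WITH_YOUR_TOKEN          # placeholder credential
contexts:
  - name: my-context                          # ties a cluster to a user
    context:
      cluster: my-cluster
      user: my-user
current-context: my-context                   # the context kubectl uses by default
```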

Once the manifest reaches the Kubernetes API Server, Kubernetes takes care of running the Nginx Pod on one of the worker nodes. I am not going into the details of how Kubernetes achieves this here, because it requires more explanation. I will cover it in another article.

At the end of this process, you end up with a running Nginx container on Kubernetes.

Now that you understand how a container ends up running on Kubernetes, let's move on to the benefits of running it there.

What are some of the benefits that we can see when working with Kubernetes

- Self Healing: Self healing is the ability to recover from failures automatically, without user intervention. If the Nginx container above crashes, for example because of memory issues or an application bug, Kubernetes restarts it for you. If the underlying worker node fails, Kubernetes can recreate the Pod on a healthy node, provided the Pod is managed by a controller such as a Deployment rather than created directly as a bare Pod like the one above.

- Automation: The Pod above can be deployed from CI/CD pipelines. The API Server exposes a REST API, and client libraries are available for several programming languages, so any part of the workflow above can be automated.

- Scalability: You can ask Kubernetes to run n copies of the container above with a single command, and it will take care of running them, provided the cluster has enough resources. Kubernetes can also autoscale the number of Pods based on the CPU and memory utilization of the containers, which helps maintain a good user experience as traffic changes.

- Declarative Configuration: The entire specification for running the container was declared in a manifest file, which is easy to manage, maintain and scale. You can commit these manifests to a git repository and deploy them to different environments such as dev, staging and production, knowing that each environment gets exactly the same configuration.

- Open Source: Kubernetes is open source and has a very large community. Things move fast, and you can usually resolve issues you run into by searching for information online. Kubernetes also makes it easy to deploy third-party services like Kafka and Elasticsearch, thanks to tried and tested manifests available on the internet. So if you want to run an Elasticsearch cluster, you can grab such manifests and apply them to your cluster.
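Several of these benefits come from using a Deployment instead of the bare Pod shown earlier. The sketch below wraps the same Nginx container in a Deployment that keeps three replicas running and restarts the container if its liveness probe keeps failing, plus a HorizontalPodAutoscaler that adds or removes Pods to keep average CPU utilization around 70%. The resource names and all the numbers are illustrative assumptions, not recommendations. The autoscaler also assumes a metrics source such as metrics-server is installed in the cluster, and it measures CPU utilization against the container's CPU request, which is why the manifest sets one. Both resources can live in one file separated by `---`, be committed to git, and be applied with `kubectl apply -f`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment          # hypothetical name
spec:
  replicas: 3                     # Kubernetes keeps 3 copies of the Pod running
  selector:
    matchLabels:
      app: nginx                  # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 100m           # CPU request; utilization is measured against it
          livenessProbe:          # container is restarted if this check keeps failing
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa                 # hypothetical name
spec:
  scaleTargetRef:                 # the Deployment this autoscaler controls
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70  # aim for ~70% of the CPU request on average
```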

When to use Kubernetes

It is important to understand when you should and should not use Kubernetes. Kubernetes can be costly because of the additional master node, and it takes engineering time to set up and maintain.

If you only have a couple of services to run, I would avoid Kubernetes and run them on a virtual machine using Docker Compose instead.

You want to avoid the overhead of maintaining a Kubernetes cluster just to run a couple of services, which can also become very expensive.
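For comparison, here is a minimal sketch of a Compose file (saved as `compose.yaml` or `docker-compose.yml`) that runs the same Nginx container on a single virtual machine. The `api` service and its image `my-api:1.0` are hypothetical placeholders for your own application. You would start both with `docker compose up -d`. The `restart` policy gives you basic recovery from container crashes, but unlike Kubernetes it cannot move containers to another machine if the VM itself fails.

```yaml
services:
  nginx:
    image: nginx:latest
    ports:
      - "80:80"               # host port 80 -> container port 80
    restart: unless-stopped   # Docker restarts the container if it exits unexpectedly
  api:
    image: my-api:1.0         # hypothetical application image
    restart: unless-stopped
```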

Also, if your services do not receive much incoming traffic, features such as autoscaling and self healing do not yet add enough value to justify the additional cost.

However, if you run more than just a few services (say, six or seven) and have enough incoming traffic to justify the cost to the business, you should go ahead and operate a Kubernetes cluster.

How can you get started with a Kubernetes Cluster

To get started, you can set up Minikube on your local machine and start experimenting with it.

In my opinion, the best resources for learning Kubernetes are on YouTube. You can also start reading the official Kubernetes documentation.

I will soon publish more guides in this series that you can use to learn more about Kubernetes. You can subscribe to my newsletter to be notified when I do.

I hope this guide gave you a better understanding of how Kubernetes works, what its benefits are, and why you should learn it.

Your man

Sagar Gulabani